Technical overview of the Oikotie service

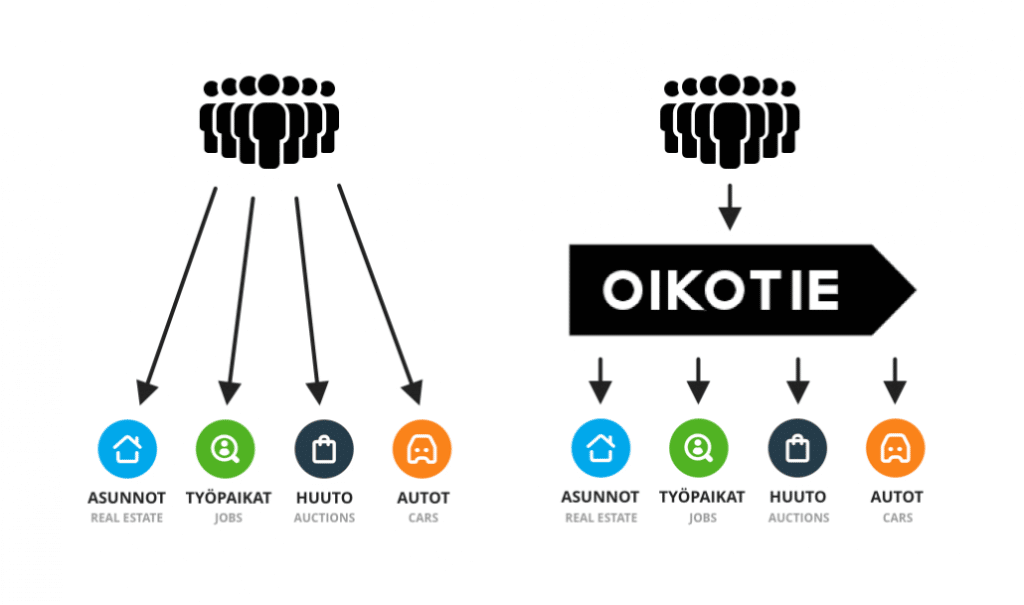

Around two years ago, Codemate and Idean started a project with Sanoma Digital Finland to develop a new cross-platform service that brings together the existing Oikotie services. The Oikotie ecosystem consists of several consumer-targeted verticals, including:

- Oikotie Asunnot (to buy, sell and rent real estate)

- Oikotie Työpaikat (for job advertisements)

- Huuto.net (an online auction platform) and

- Autotie (to buy and sell cars).

Oikotie has more than 1 million weekly visitors. According to Finnish Internet Audience Measurement (FIAM), Oikotie is one of the most popular web services in Finland and reaches around 2 million people every month.

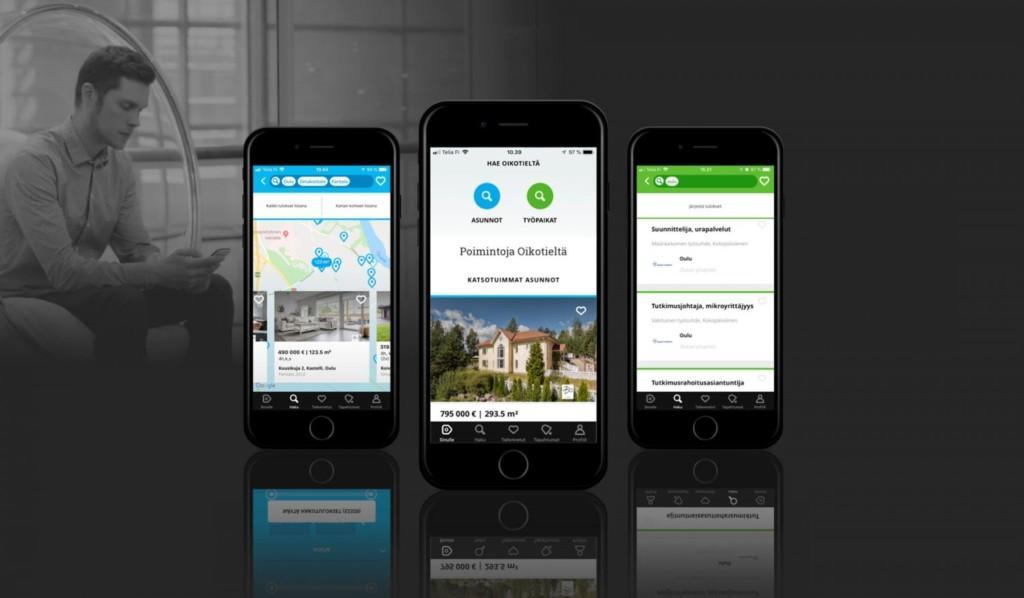

The goal of the project was to provide the user with a personalised view of all Oikotie verticals in a single application. Idean was responsible for UI/UX design, and Codemate provided the technical implementation of the system. Personally, I saw it as a great opportunity to be the lead developer of a project of this scale. In this blog post, I’ll give an overview of the background of the project, and of the technical solution and software architecture that were used to build the new service.

Bringing it all together…

To show content from all the different verticals, the new service needed to be fully integrated into the existing ecosystem of Oikotie products. All Oikotie verticals are loosely coupled and function independently. Furthermore, the data related to the existing Oikotie services is stored and managed by the verticals and can be accessed through vertical-specific APIs.

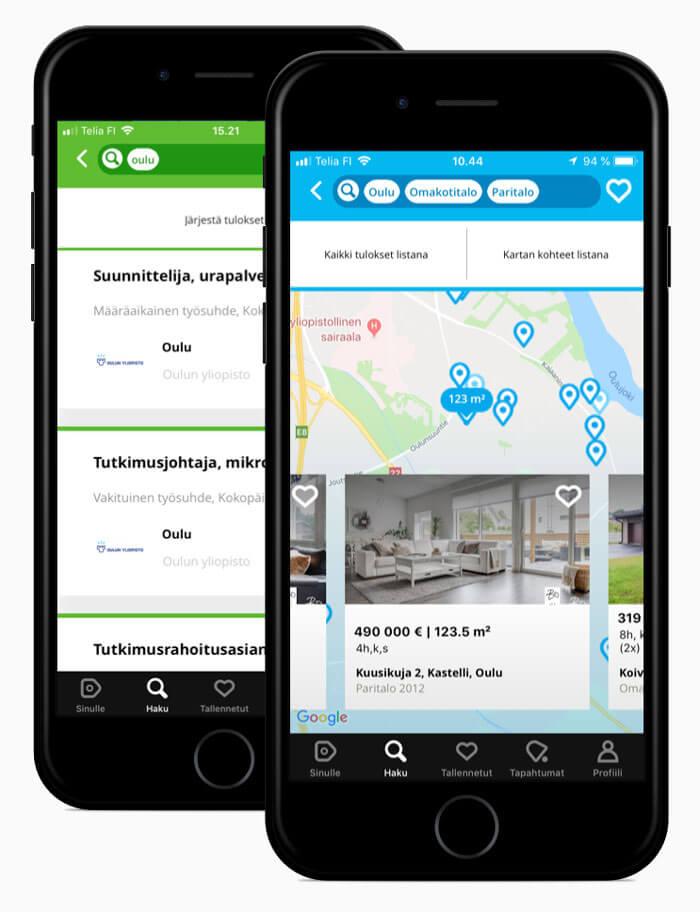

First, this means that a separate integration is needed for each vertical. Second, the role of the new service is to find the content in these verticals that is relevant to a user. As the content is gathered from different sources, it needs to be transformed into a unified format so that it can be shown to a user, e.g., as items in a personalised feed (see Figure 1). Third, the service contains a relatively large amount of media content, so caching plays a big role in keeping performance at a high level.
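The transformation into a unified format can be illustrated with a minimal Python sketch. It is not the production code: the `FeedItem` fields, the vertical labels and the raw payload keys (`listingId`, `publishedTs`, `posted` and so on) are all hypothetical and only serve to show the idea of mapping differently shaped sources into one feed.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class FeedItem:
    """Unified shape for content shown in the personalised feed (assumed fields)."""
    vertical: str
    item_id: str
    title: str
    url: str
    published: datetime


def from_apartment(raw: dict) -> FeedItem:
    # Hypothetical payload shape for a housing listing (Unix timestamp).
    return FeedItem(
        vertical="asunnot",
        item_id=str(raw["listingId"]),
        title=f'{raw["rooms"]} rooms, {raw["city"]}',
        url=raw["link"],
        published=datetime.fromtimestamp(raw["publishedTs"], tz=timezone.utc),
    )


def from_job(raw: dict) -> FeedItem:
    # Hypothetical payload shape for a job advertisement (ISO 8601 date).
    return FeedItem(
        vertical="tyopaikat",
        item_id=str(raw["id"]),
        title=f'{raw["position"]} at {raw["employer"]}',
        url=raw["href"],
        published=datetime.fromisoformat(raw["posted"]),
    )


def build_feed(items: list[FeedItem]) -> list[FeedItem]:
    # Newest first, regardless of which vertical the item came from.
    return sorted(items, key=lambda item: item.published, reverse=True)
```

Once every source is mapped to the same shape, sorting, filtering and rendering only need to know about `FeedItem`, not about the individual verticals.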

Because of the large number of integrations and the significant workload related to them, a means of simplifying the integration process was needed. Instead of connecting and authenticating to each vertical separately, an abstraction layer on top of the vertical APIs was used. The abstraction allowed access to all the APIs through a single entity using the same authentication mechanism, which simplified and unified the integration process considerably.
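As a rough illustration of such an abstraction layer, the following Python sketch puts a single entry point in front of several vertical-specific fetchers that all receive the same credential. The class name `VerticalGateway`, the fetcher signature and the fake fetchers are assumptions made for this example; the real layer and its authentication mechanism are not described in this post.

```python
from typing import Callable, Dict, List

# A fetcher takes the shared auth token and a user id and returns raw items.
Fetcher = Callable[[str, str], List[dict]]


class VerticalGateway:
    """Single entry point that hides the individual vertical APIs (hypothetical)."""

    def __init__(self, token: str) -> None:
        self._token = token  # one credential shared by all verticals
        self._fetchers: Dict[str, Fetcher] = {}

    def register(self, vertical: str, fetcher: Fetcher) -> None:
        self._fetchers[vertical] = fetcher

    def fetch(self, vertical: str, user_id: str) -> List[dict]:
        return self._fetchers[vertical](self._token, user_id)

    def fetch_all(self, user_id: str) -> Dict[str, List[dict]]:
        return {name: self.fetch(name, user_id) for name in self._fetchers}


# Stand-in fetchers; real ones would call the vertical APIs over HTTP.
def fake_jobs(token: str, user_id: str) -> List[dict]:
    return [{"id": 1, "position": "Developer", "auth": token}]


def fake_apartments(token: str, user_id: str) -> List[dict]:
    return [{"listingId": 7, "city": "Oulu", "auth": token}]
```

The calling code registers each vertical once and then only talks to the gateway, so adding a new vertical does not change how the rest of the service authenticates or fetches content.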

Figure 1: The new Oikotie service shows users content from the verticals, such as apartments and jobs from Oikotie Asunnot and Oikotie Työpaikat, and featured blog content.

…and breaking it down to pieces

To serve around 2 million monthly users, the system must be built to be scalable. Following the common microservice architecture, the system was divided into small loosely coupled services, and each of these services was run in its own Docker container so that each one can be scaled independently. There is one service for the core functionality of the application, and separate microservices for dedicated tasks, such as push notification delivery and handling of incoming events and notifications.

For hosting, the Amazon Web Services (AWS) cloud platform was selected. Furthermore, the AWS Elastic Container Service (ECS) was used for container orchestration.

From the technical perspective, the microservice architecture decouples the components from each other and allows for implementing all the different components using the technology stack that best suits the needs of the component. Yet, there are also non-technical requirements that impact the system design. To guarantee smooth handover and maintainability after the active development phase, there was a strong incentive to implement the new service using technology that is already used in the verticals.

Like so many complex systems, Oikotie is also built of small, simple and familiar building blocks; when you dive in deep, the technical solution you’ll find is a basic LAMP stack with suitable frameworks and SDKs that are required for the integrations. You don’t need to have years of experience to understand or modify the codebase. In all huge systems, including Oikotie, there is always room for developers with different levels of experience.

But does it scale?

Following the AWS best practices, scalability and high availability (HA) were selected as fundamental design principles of the architecture. To be scalable on the application level, all the API requests were made stateless, external message queues were used to decouple the internal components that produce and consume data, user sessions were stored in a shared in-memory database, and a shared relational database was used as persistent storage. AWS Relational Database Service (RDS) offers off-the-shelf Multi-AZ deployments and read replicas to provide high availability and scalability. For the in-memory database (ElastiCache for Redis), Multi-AZ replication and clustering can be used. Simple Queue Service (SQS) was used as the message queuing service.
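The pattern of stateless requests, shared sessions and queue-based decoupling can be sketched in a few lines of Python. This is a simplified local model, not the actual implementation: a `queue.Queue` stands in for an external queue such as SQS, a plain dictionary stands in for the shared in-memory store, and the function names and status codes are chosen for the example only.

```python
import json
import queue

events = queue.Queue()  # stand-in for an external message queue such as SQS
sessions = {}           # stand-in for a shared in-memory store such as Redis


def handle_request(session_id: str, payload: dict) -> dict:
    """Stateless handler: everything it needs comes from the shared stores."""
    user = sessions.get(session_id)
    if user is None:
        return {"status": 401}
    # Publish the event instead of doing the slow work inside the request.
    events.put(json.dumps({"user": user, "event": payload["event"]}))
    return {"status": 202}


def drain(handler) -> int:
    """Consumer side: process queued messages until the queue is empty."""
    processed = 0
    while True:
        try:
            message = events.get_nowait()
        except queue.Empty:
            return processed
        handler(json.loads(message))
        processed += 1
```

Because the handler keeps no state of its own, any instance can serve any request, and producers and consumers can be scaled separately as long as they share the queue and the session store.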

On the infrastructure level, auto-scaling was configured on both the container level (ECS) and the server level (EC2). Later, after AWS Fargate was made available in Q2/2018, a gradual migration to Fargate was started for the suitable components of the system. The scalability of the system was verified by load testing based on the peak traffic metrics received from the analytics of the existing Oikotie products.

Consequently, compared to other services of the same size, the infrastructure costs of the service have been relatively low. This is partly due to efficient caching on different levels of the system, and partly because of the architecture that enables the effective use of allocated resources, and flexible autoscaling of the infrastructure based on the actual need of resources.

First steps of the Oikotie service

The public launch of the new Oikotie service was in May 2018. Now, as the service has been in use for some time, it is good to take a look back and evaluate the design choices and implementation of the selected technical solution.

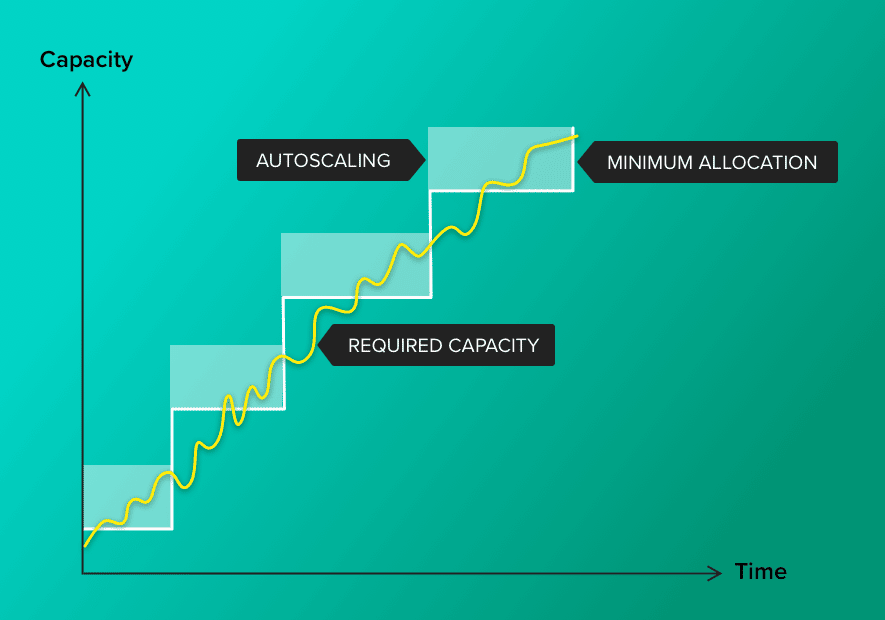

After the launch, new users have constantly flowed in, and more and more infrastructure has been needed to serve them. In the short term, autoscaling has handled the need for extra resources. Over a longer time span, the minimum allocation of resources has also been increased to handle the higher amount of traffic and system load. Since the launch of the service, different components of the system have been scaled by several hundred per cent, both horizontally (number of concurrent instances) and vertically (instance sizes). From an architectural perspective, there have been no challenges.

Yet, no system is perfect. Improvements, further development and maintenance are needed as the system, and the needs, evolve. However, the selected architecture and technical solutions provide a good basis for these changes and allow the service to be easily updated, scaled and extended for future needs.

Autoscaling

Autoscaling is a method for automatically reserving more resources, or freeing existing ones, within given bounds. Scaling is done based on a scaling policy that defines when and how much to scale in or out. Autoscaling can be scheduled, or it can be triggered by a given metric, such as CPU utilization or memory usage.

In a system that uses autoscaling, there is a predefined minimum allocation of resources that covers the normal system load. When the demand for resources gets higher, e.g., during the peak traffic hours of the day, the service can automatically scale out and increase the resource allocation. As the load returns to the normal level, the system scales in and frees the unused resources, which brings savings in infrastructure costs. Autoscaling helps handle short-term variation in the need for resources. When the demand for resources increases permanently, e.g., as the user base of a service grows, the minimum allocation of resources needs to be increased.
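A metric-triggered policy with bounds can be expressed as a small Python function. This is a simplified proportional rule written for illustration, assuming a single metric and a fixed target value; it is not the exact algorithm used by AWS, and the function and parameter names are made up for this sketch.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     minimum: int, maximum: int) -> int:
    """Proportional scaling rule, clamped to the configured bounds."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    # Scale the instance count by how far the metric is from its target.
    wanted = math.ceil(current * metric / target)
    # Never go below the minimum allocation or above the maximum.
    return max(minimum, min(maximum, wanted))
```

With four instances, a CPU target of 60 % and bounds of 2 to 10, a measured utilization of 90 % gives six instances, while a quiet period at 15 % scales in only as far as the minimum of two. Raising `minimum` corresponds to the permanent increase in the base allocation described above.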

Conclusion

With the new Oikotie service, personalised content from all the different verticals of the Oikotie ecosystem can be shown in a single application. The system is based on the microservice architecture, hosted on AWS, and built to be scalable, fault-tolerant and highly available. The high number of integrations increases the overall complexity of the system, but the selected technical solution divides the service into loosely coupled components that can be managed separately and are, in that way, easier to develop and maintain. Personally, the project has been both challenging and rewarding, and I can proudly say that we have succeeded with our technical solution.

Want to learn more?

Jukka is happy to share the story, how we helped Schibsted and could help you as well.
